If you’re not testing, you’re guessing. In eCommerce, that’s a costly gamble.

At Wavesy, we’ve seen brands double or even triple their conversions by applying structured, insight-driven A/B testing. This isn’t about changing button colors and hoping for magic. It’s about data, psychology, and iterative learning that compounds into massive ROI.

This guide breaks down how top eCommerce brands use A/B testing to systematically grow conversions, where other guides fall short, and how you can replicate their results.

What Is A/B Testing (and Why It’s the Smartest Way to Grow Conversions)

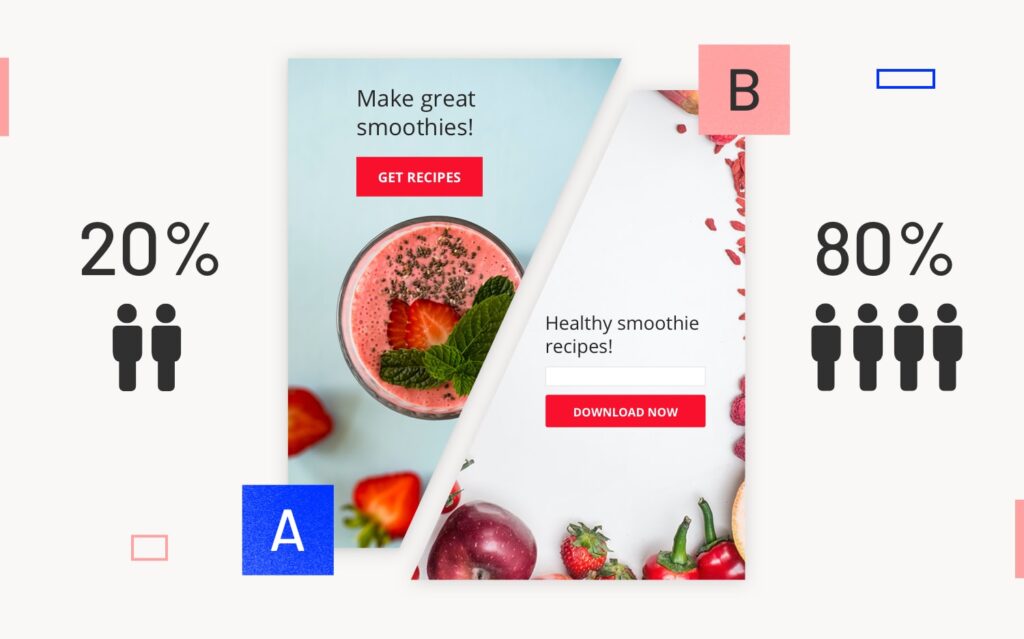

A/B testing — also called split testing — compares two versions of a page, ad, or element to see which one performs better.

You show half your traffic “Version A” (control) and the other half “Version B” (variation). The goal? Let data, not assumptions, decide what converts.
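As a minimal sketch of how that 50/50 split can work in practice, the snippet below hashes a visitor ID so the same person always sees the same version. The function name, the experiment key, and the ID format are illustrative assumptions, not how any particular testing tool does it.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically map a visitor to 'A' (control) or 'B' (variation)."""
    # Hash the experiment name together with the visitor ID so that the same
    # visitor always gets the same version, and separate experiments bucket
    # independently of each other.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    # Turn the first 8 hex characters into a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    # Hypothetical visitor ID and experiment name.
    print(assign_variant("visitor-123", "cta-copy-test"))
```

Hashing instead of picking at random on every page load keeps a returning visitor in the same group, which keeps the comparison clean.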

For eCommerce brands, A/B testing is one of the highest-leverage CRO tactics because it:

- Increases add-to-cart and checkout completion rates

- Validates design and messaging decisions before scaling

- Improves ad ROI by optimizing landing page alignment

- Builds confidence in what truly drives your revenue

💡 Example: A Shopify skincare brand tested its “Add to Cart” button copy. Version A said “Add to Cart,” while Version B said “Buy Now – Ships Fast.” The result? 23% more clicks and a 17% lift in completed checkouts.

What Other A/B Testing Guides Miss (and Why It Matters)

We analyzed Unbounce, Convert, and Contentful’s top-performing guides. Here’s what they missed — and what Wavesy clients gain by going deeper.

| 🚫 Missed Area | Why It Matters | 🧩 Wavesy’s Approach |
|---|---|---|
| Data-driven hypothesis creation | Random tests waste time and budget | We teach brands how to form hypotheses from analytics + heatmaps |
| Low-traffic optimization | Most guides assume enterprise-level traffic | We include alternate testing methods for smaller stores |
| Full-funnel measurement | A test that increases clicks may lower AOV | We track secondary metrics across the funnel |
| Real eCommerce use cases | Many examples are from SaaS or lead-gen | We focus on PDPs, checkouts, and upsells |
| Scalable testing culture | “Run more tests” ≠ sustainable growth | We build experimentation systems that scale |

The Wavesy Framework for eCommerce A/B Testing

A winning test isn’t luck. It’s process.

Here’s how our CRO strategists at Wavesy structure each A/B test — from hypothesis to rollout.

1. Audit Your Baseline

Before testing, you need to know what’s normal:

- Track metrics like conversion rate, AOV, cart abandonment, and bounce rate.

- Use tools like Hotjar, GA4, and Microsoft Clarity to identify friction points.

- Segment traffic by device, source, and intent — mobile users often behave differently than desktop buyers.

2. Form a Smart Hypothesis

Your test idea should connect a clear change to a measurable outcome, and it should target a specific conversion killer you spotted in the baseline audit.

Format:

“If we change X to Y for Z audience, we expect W improvement in M metric.”

Example:

If we move the “Add to Cart” button above the product fold for mobile users, we expect a 10% lift in clicks and a 5% lift in conversions.

Prioritize ideas using the PIE framework (Potential, Importance, Ease) — focusing on changes most likely to move revenue.
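A PIE ranking can be sketched in a few lines. The 1 to 10 scale, the simple average, and the example ideas and scores below are assumptions for illustration; teams often weight the three factors differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Idea:
    name: str
    potential: int   # how much improvement is possible (1-10)
    importance: int  # how valuable the traffic to this page is (1-10)
    ease: int        # how easy the test is to build and ship (1-10)

    @property
    def pie_score(self) -> float:
        # Unweighted average of the three PIE factors.
        return (self.potential + self.importance + self.ease) / 3


def prioritize(ideas: List[Idea]) -> List[Idea]:
    """Return ideas sorted from highest to lowest PIE score."""
    return sorted(ideas, key=lambda idea: idea.pie_score, reverse=True)


if __name__ == "__main__":
    # Hypothetical backlog with made-up scores.
    backlog = [
        Idea("Move Add to Cart above the fold on mobile", 8, 9, 7),
        Idea("Rewrite footer copy", 2, 3, 9),
        Idea("One-page checkout", 9, 9, 3),
    ]
    for idea in prioritize(backlog):
        print(f"{idea.pie_score:.1f}  {idea.name}")
```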

3. Design & Launch the Test

- Choose your tool (Convert, VWO, or another alternative to the now-discontinued Google Optimize).

- Decide traffic split (usually 50/50) and test duration (at least 14 days, and long enough to reach the sample size you planned up front; don’t stop the moment a result first looks significant).

- QA across devices. Nothing kills data faster than a broken checkout flow.

- Avoid “flicker effect” — test scripts should load fast and not flash variant content.
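To decide on duration before launch, you can estimate how many visitors each variant needs. The sketch below uses the standard normal-approximation formula for comparing two conversion rates; the 5% significance level, 80% power, and the 14-day floor are conventional defaults assumed here, and the function names are illustrative.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed per variant to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_needed(n_per_variant: int, daily_visitors: int, min_days: int = 14) -> int:
    """Days to run a 50/50 test, never shorter than min_days."""
    return max(min_days, math.ceil(2 * n_per_variant / daily_visitors))


if __name__ == "__main__":
    # Hypothetical store: 3% baseline conversion, hoping for a 10% relative lift.
    n = sample_size_per_variant(0.03, 0.10)
    print(n, "visitors per variant;", days_needed(n, daily_visitors=2000), "days")
```

Small expected lifts on low baseline rates need a lot of traffic, which is why smaller stores often need the alternative methods covered later in this guide.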

4. Analyze the Results (and What’s Really Behind Them)

Once the test completes:

- Measure primary KPIs (conversion rate, checkout completion)

- Examine secondary metrics (bounce rate, AOV, repeat purchase)

- Confirm statistical significance before declaring a winner

👉 Pro tip: Sometimes “no difference” is a win. If the test had enough traffic to detect a meaningful change and found none, your new variant performs about as well as the control, giving you creative flexibility for brand consistency or SEO benefits.
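One common way to check significance for conversion rates is a two-proportion z-test. The sketch below is a plain-Python version under that assumption; your testing tool may use a different method (for example, a Bayesian or sequential approach), and the visitor and conversion counts are made up.

```python
import math
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 300/10,000 orders on A vs 360/10,000 on B.
    z, p = two_proportion_z_test(300, 10_000, 360, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below your chosen threshold (0.05 is the usual convention) suggests the difference is unlikely to be noise, provided you fixed the sample size in advance.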

5. Document, Roll Out, and Iterate

The worst mistake in CRO? Not learning from your own tests.

Maintain an experiment log that includes:

- Hypothesis

- Variants tested

- Duration

- Results & significance

- Lessons learned

Then, roll out the winner sitewide and use those insights to form your next hypothesis.

Small, consistent wins compound into exponential conversion growth.
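If you don't have a dedicated tool for the experiment log, a structured record is enough to start. This is a minimal sketch; the field names mirror the list above, and the JSON-lines format and example values are assumptions.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ExperimentRecord:
    hypothesis: str
    variants: List[str]
    duration_days: int
    result: str
    p_value: float
    lessons: List[str] = field(default_factory=list)


def to_log_line(record: ExperimentRecord) -> str:
    """Serialize one experiment as a single JSON line for an append-only log."""
    return json.dumps(asdict(record))


if __name__ == "__main__":
    # Hypothetical entry with made-up numbers.
    record = ExperimentRecord(
        hypothesis="Moving Add to Cart above the fold lifts mobile clicks by 10%",
        variants=["control", "button-above-fold"],
        duration_days=14,
        result="variant won",
        p_value=0.02,
        lessons=["Mobile users rarely scroll past the first image"],
    )
    print(to_log_line(record))
```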

High-Impact A/B Testing Ideas for eCommerce Stores

Here’s what top eCommerce brands test most often (and where Wavesy sees the biggest wins):

| Area | 🧭 Test Example | 📊 Why It Works |

|---|---|---|

| Product Page Copy | “Handmade” vs “Sustainably Crafted” | Emotional language drives intent |

| Price Display | “$59.99” vs “$60 (Free Shipping)” | Simplifies decision friction |

| CTA Button | “Add to Cart” vs “Buy Now – Ships Fast” | Creates urgency and reassurance |

| Trust Elements | Adding review stars near CTA | Builds social proof at the conversion point |

| Checkout Flow | One-page checkout vs multi-step | Reduces abandonment |

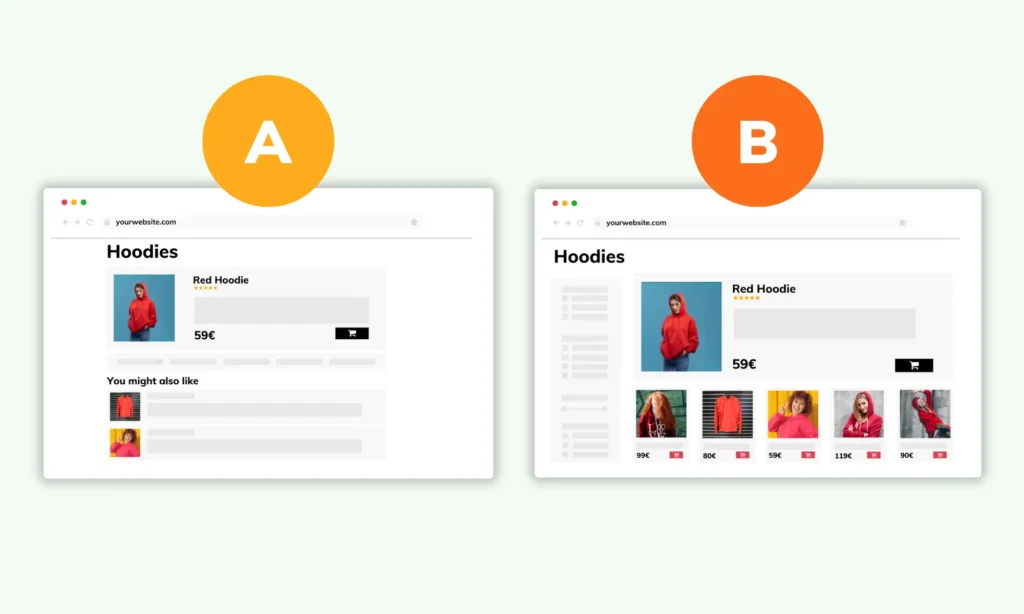

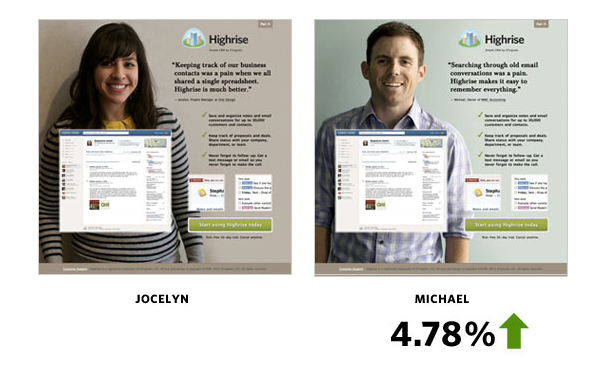

| Images | Lifestyle vs product-only shots | Contextual visuals increase confidence |

Real eCommerce Examples That Doubled Conversions

🧴 DTC Beauty Brand: Checkout Simplification

- Problem: 3-step checkout causing 65% abandonment.

- Test: Switched to a one-page checkout with autofill and progress indicators.

- Result: 48% reduction in abandonment and a 2× conversion rate improvement.

👟 Apparel Brand: Social Proof Repositioning

- Problem: High bounce rate on mobile PDPs.

- Test: Moved customer reviews above the fold with a “4.8⭐ rating” snippet.

- Result: 22% lift in add-to-cart and 18% higher average session duration.

📦 Home Goods Brand: Pricing Transparency

- Problem: Cart drop-offs after shipping costs revealed.

- Test: Added “Free Shipping Over $75” banner to PDPs.

- Result: 31% higher checkout starts, 12% higher total revenue per visitor.

🧮 Tracking Metrics That Actually Matter

Don’t stop at “conversion rate.”

Here’s what Wavesy monitors for every experiment:

- Primary metrics: conversion rate, AOV, revenue per visitor

- Secondary metrics: scroll depth, engagement, checkout rate

- Segment data: desktop vs mobile, new vs returning, paid vs organic

- Test health: sample size, significance, and variance stability
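To see why conversion rate alone can mislead, here is a small sketch that computes the three primary metrics per variant. The function name and all numbers are made up for illustration.

```python
from typing import Dict, List


def variant_metrics(visitors: int, order_values: List[float]) -> Dict[str, float]:
    """Compute conversion rate, AOV, and revenue per visitor for one variant."""
    orders = len(order_values)
    revenue = sum(order_values)
    return {
        "conversion_rate": orders / visitors,
        "aov": revenue / orders if orders else 0.0,
        "revenue_per_visitor": revenue / visitors,
    }


if __name__ == "__main__":
    # Hypothetical data: B converts more visitors but at a smaller basket size.
    a = variant_metrics(1000, [60.0] * 30)
    b = variant_metrics(1000, [45.0] * 36)
    for name, metrics in (("A", a), ("B", b)):
        print(name, {k: round(v, 3) for k, v in metrics.items()})
```

In this made-up case, Version B has the higher conversion rate but the lower revenue per visitor, so calling it the winner on conversion rate alone would cost money.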

Avoid these pitfalls:

- Stopping a test too early

- Testing multiple elements at once

- Ignoring page speed (Core Web Vitals matter for conversions)

- Failing to analyze downstream effects (like repeat purchase rate)

Source: Unbounce

When You Can’t A/B Test – What To Do?

Smaller stores often lack enough traffic for statistical significance. That doesn’t mean you can’t optimize.

Alternative methods:

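- Multi-armed bandit testing: Shifts traffic toward the better-performing variant as data comes in, so fewer visitors see the loser. (Multivariate testing is not a low-traffic option; it splits traffic across more combinations and needs more volume than a simple A/B test.)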

- Heatmaps & user session recordings: Show behavioral insights faster than traffic-based tests.

- Pre-launch validation: Test messaging via Meta ads before updating live pages.

- Micro-experiments: Test single-page flows with focused audiences (e.g., email traffic only).

Even without traditional A/B volume, these methods help you make data-backed improvements that compound over time.
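For the bandit option, one widely used approach is Thompson sampling. The sketch below is a minimal version under that assumption, with a uniform Beta(1, 1) prior and simulated conversion rates; it is not how any specific tool allocates traffic.

```python
import random
from typing import Dict, List, Optional


class ThompsonSampler:
    """Allocate traffic by sampling each variant's plausible conversion rate."""

    def __init__(self, variants: List[str], seed: Optional[int] = None) -> None:
        self.stats: Dict[str, Dict[str, int]] = {
            v: {"conversions": 0, "visitors": 0} for v in variants
        }
        self.rng = random.Random(seed)

    def choose(self) -> str:
        # Draw one sample per variant from its Beta posterior and show the
        # variant with the highest draw. Uncertain variants still get explored.
        draws = {}
        for name, s in self.stats.items():
            alpha = 1 + s["conversions"]
            beta = 1 + s["visitors"] - s["conversions"]
            draws[name] = self.rng.betavariate(alpha, beta)
        return max(draws, key=draws.get)

    def record(self, variant: str, converted: bool) -> None:
        self.stats[variant]["visitors"] += 1
        if converted:
            self.stats[variant]["conversions"] += 1


if __name__ == "__main__":
    # Simulated store where B truly converts better (made-up rates).
    true_rates = {"A": 0.05, "B": 0.15}
    sampler = ThompsonSampler(["A", "B"], seed=1)
    visitor_rng = random.Random(2)
    for _ in range(5000):
        shown = sampler.choose()
        sampler.record(shown, visitor_rng.random() < true_rates[shown])
    print(sampler.stats)
```

The trade-off is that a bandit optimizes revenue during the test but gives you a less clean read on exactly how much better the winner is.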

Building a Culture of Experimentation

The most successful eCommerce brands treat CRO as a system, not a one-off project.

Here’s how Wavesy helps clients scale A/B testing long-term:

- Implement a centralized experimentation hub to log results and insights.

- Train internal teams to understand test methodology.

- Automate variant creation with modular design systems.

- Schedule biweekly “test sprints” for new hypotheses.

- Measure win rates and category insights quarterly.

When testing becomes part of your culture, growth becomes predictable — not accidental.

A great example of how using a different image can influence an A/B test. (Source: HubSpot)

10 eCommerce A/B Tests You Can Run This Month

(Quick wins that apply to any brand or industry)

- Test “Buy Now” vs “Add to Cart” CTA copy

- Show vs hide “Free Shipping Over $X”

- Lifestyle vs product-only images on PDPs

- Price ending in .99 vs whole number

- Testimonials above vs below CTA

- Limited-time offer badge vs static pricing

- “As Seen On” media logos on homepage

- Checkout with vs without guest option

- Product video vs static image

- Email popup timing: 10 sec vs exit intent

Even if only half of these deliver wins, the lifts compound: five relative lifts of about 15% each multiply out to roughly double your overall conversion rate.
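The compounding math is easy to check yourself. This sketch assumes each win is a relative lift that stacks multiplicatively with the others, which is a simplification since real lifts can overlap; the function names are illustrative.

```python
import math
from typing import List


def compounded_lift(relative_lifts: List[float]) -> float:
    """Multiply out a series of relative lifts (0.15 means +15%)."""
    total = 1.0
    for lift in relative_lifts:
        total *= 1 + lift
    return total


def wins_needed_to_double(lift_per_win: float) -> int:
    """How many equal-sized wins it takes to reach at least 2x."""
    return math.ceil(math.log(2) / math.log(1 + lift_per_win))


if __name__ == "__main__":
    print(round(compounded_lift([0.15] * 5), 2))  # five wins of 15% each
    print(wins_needed_to_double(0.05))            # wins needed at 5% each
```

Five wins of 15% each come to about 2×, while at 5% per win you would need 15 of them, so the size of each win matters as much as the count.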

Final thoughts

A/B testing isn’t just a CRO tactic — it’s your growth engine.

Every test teaches you something about your customers, and every insight compounds into long-term revenue.

At Wavesy, we don’t just run tests — we build systems that turn optimization into predictable profit.

Book your introductory call through this link and, for a limited time, get 2 A/B tests for free.