You want faster test results.

You want to skip the weeks of waiting for statistical significance.

You want to test 100 variations instead of two.

Agentic synthetic testing promises exactly that: run thousands of AI-simulated users against your designs in minutes and get statistically significant results without real-traffic constraints.

Meta has already deployed rich-state simulated populations across Facebook, Instagram, and Messenger, increasing fault detection by 115% and enabling over 21,000 engineers to test internally before exposing features to real users.

At Wavesy, we’ve watched the synthetic testing landscape evolve from experimental curiosity to genuine capability.

The technology delivers measurable value for specific use cases.

But it also carries a fundamental limitation that every CRO team must understand before treating synthetic results as proof.

Here’s the uncomfortable truth: real users are irrational, emotional, and distracted.

They make purchase decisions that violate their stated preferences.

They abandon carts for reasons they can’t articulate.

They fall in love with features that logic says shouldn’t matter.

AI agents, whose outputs gravitate toward the statistical center of documented behavior, cannot simulate this beautiful human chaos.

According to Mordor Intelligence, the synthetic data market is projected to reach $2.67 billion by 2030, growing at a 39.40% CAGR.

In this article, you will learn what synthetic testing actually does, where it excels, where it fails, and how to build a hybrid workflow that captures speed without sacrificing the human understanding that drives real conversions.

“Agentic synthetic testing: Run 10k AI 'users' through your site overnight. Spot drop-offs, test variants, lift CRO 20–30%… But real humans are irrational, emotional, distracted… Can silicon ever feel FOMO, trust issues, or 'just browsing' vibes? #CRO #SyntheticTesting”

— Philip Doneski 🚀 (@ImDoneski) January 22, 2026

If you want to go further down the rabbit hole, I also recommend reading AI Simulation Studies from Nielsen Norman Group (NNGroup).

TL;DR

- Synthetic testing uses AI agents with specified personas to interact with your digital products at scale, delivering results in minutes instead of weeks

- Meta’s deployment showed 38% higher code coverage and 115% more faults detected when using rich-state simulated populations

- The critical limitation: AI agents sample from historical data distributions and optimize for the median, not novel behavior or genuine innovation

- Research confirms LLM-generated personas fail to show meaningful behavioral variation and exhibit systematic biases toward positive sentiment

- Best applications: functional regression testing, accessibility compliance, filtering obviously broken designs, rapid iteration on familiar patterns

- Real users remain essential for market validation, emotional responses, novel feature adoption, and understanding irrational decision-making

What Is Synthetic Testing?

Synthetic testing is the use of AI-generated users (also called simulated users, test users, or synthetic agents) to interact with digital products at scale, replacing or augmenting traditional user research methods like A/B testing, usability studies, and focus groups.

Unlike traditional A/B testing, which requires real traffic and weeks of data collection, synthetic testing deploys AI agents with specified personas to navigate your site, complete tasks, and generate feedback in minutes.

These personas include demographic profiles, behavioral patterns, and accessibility constraints.

Tools like UXAgent enable UX researchers to automatically simulate thousands of interactions against a design before committing to human-subject studies.

The technology differs from simple automated testing (which follows predetermined scripts) because synthetic agents make dynamic decisions based on learned behavioral patterns.

They encounter unexpected states.

They evaluate options.

They choose actions the same way an LLM handles any other prompt: by generating the most statistically plausible next step.

Summary: Synthetic testing runs AI users against your designs at scale, delivering rapid feedback without real traffic, but the agents optimize for historical behavior patterns, not novel responses.

How Does Synthetic Testing Work?

Synthetic testing combines large language models, persona generation, and browser automation to simulate user behavior at scale.

The process involves several interconnected components that work together to create believable interactions.

Persona Generation

The system creates synthetic user profiles based on demographic specifications you define.

You might request 1,000 users distributed across age brackets, geographic regions, digital literacy levels, and accessibility needs.

Each persona receives attributes that theoretically influence how they interact with your design.

Pro tip: The more specific your persona definitions, the more useful your results. Generic “25-34 male” personas produce generic feedback.

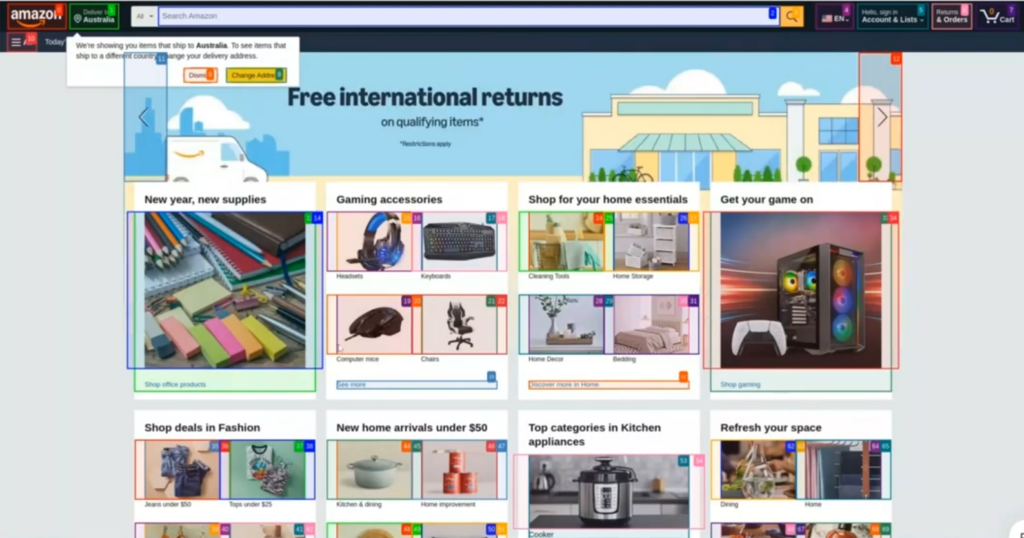
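A minimal sketch of the persona step in Python, assuming you already know the target share for each attribute. The distributions, the `Persona` fields, and the `to_prompt` wording are hypothetical placeholders, not the format used by UXAgent or any other tool:

```python
import random
from dataclasses import dataclass

# Hypothetical target shares; in practice, derive them from your own analytics.
AGE_BRACKETS = {"18-24": 0.15, "25-34": 0.30, "35-44": 0.25, "45-54": 0.18, "55+": 0.12}
REGIONS = {"North America": 0.40, "Europe": 0.35, "APAC": 0.25}
DIGITAL_LITERACY = {"low": 0.20, "medium": 0.50, "high": 0.30}
ACCESSIBILITY_NEEDS = {"none": 0.85, "screen reader": 0.05, "low vision": 0.05, "motor impairment": 0.05}
CONTEXTS = {"first-time buyer": 0.30, "repeat customer": 0.50, "gift shopper": 0.20}


@dataclass(frozen=True)
class Persona:
    persona_id: int
    age_bracket: str
    region: str
    digital_literacy: str
    accessibility_need: str
    context: str


def draw(dist: dict[str, float], rng: random.Random) -> str:
    """Sample one category according to its weight."""
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]


def generate_personas(n: int, seed: int = 42) -> list[Persona]:
    """Create n personas whose attributes follow the target shares (seeded for reproducibility)."""
    rng = random.Random(seed)
    return [
        Persona(i, draw(AGE_BRACKETS, rng), draw(REGIONS, rng), draw(DIGITAL_LITERACY, rng),
                draw(ACCESSIBILITY_NEEDS, rng), draw(CONTEXTS, rng))
        for i in range(n)
    ]


def to_prompt(p: Persona) -> str:
    """Render a persona as agent instructions (illustrative wording, not any tool's format)."""
    return (
        f"You are a {p.context} aged {p.age_bracket} from {p.region} with {p.digital_literacy} "
        f"digital literacy. Accessibility needs: {p.accessibility_need}. Think aloud before each action."
    )


personas = generate_personas(1000)
print(to_prompt(personas[0]))
```

Each attribute is drawn independently here for brevity. Real audiences have correlated traits (age and digital literacy, for instance), so a production generator would sample from joint distributions or real customer segments, which is also how you avoid the generic personas the pro tip warns about.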

Browser Interaction

Synthetic agents connect to real browser environments (not simulations) and interact with actual rendered pages.

Systems like UXAgent use universal browser connectors that parse HTML, identify interactive elements, and execute real actions.

This means synthetic users encounter the same loading times, layout shifts, and interactive states as real users.
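To make the parsing step concrete, here is a simplified sketch that lists the interactive elements on a page with a label for each, the kind of inventory an agent chooses from. It uses Python's standard `html.parser` on static HTML as a simplifying assumption. The connectors described above work against live, rendered pages, so this sketch sees none of the loading times or layout shifts. `InteractiveElementParser` and `interactive_elements` are hypothetical names:

```python
from html.parser import HTMLParser

# Elements an agent can usually act on. A real connector inspects the rendered DOM
# in a live browser (visibility, ARIA roles, event handlers); this sketch does not.
INTERACTIVE_TAGS = {"a", "button", "input", "select", "textarea"}


class InteractiveElementParser(HTMLParser):
    """Collect interactive elements and a human-readable label for each."""

    def __init__(self):
        super().__init__()
        self.elements = []
        self._collecting = None  # link or button whose inner text is being read

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag not in INTERACTIVE_TAGS and attrs.get("role") != "button":
            return
        element = {
            "tag": tag,
            "id": attrs.get("id"),
            # Prefer an explicit accessible name, then value/placeholder text.
            "label": attrs.get("aria-label") or attrs.get("value") or attrs.get("placeholder") or "",
        }
        self.elements.append(element)
        if tag in {"a", "button"}:
            element["text"] = ""
            self._collecting = element

    def handle_data(self, data):
        if self._collecting is not None:
            self._collecting["text"] += data

    def handle_endtag(self, tag):
        if self._collecting is not None and tag == self._collecting["tag"]:
            text = " ".join(self._collecting.pop("text").split())
            # Fall back to the visible text when no explicit label was given.
            self._collecting["label"] = self._collecting["label"] or text
            self._collecting = None


def interactive_elements(html: str) -> list[dict]:
    parser = InteractiveElementParser()
    parser.feed(html)
    parser.close()
    return parser.elements


page = ('<a href="/cart" id="cart">View <b>cart</b></a>'
        '<button aria-label="Pay now">Go</button>'
        '<input id="email" placeholder="Email">')
for element in interactive_elements(page):
    print(element)
```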

Behavioral Simulation

The AI agent processes each page state and decides what action to take next.

Unlike scripted testing, the agent reasons through options:

- Which link matches my task goal?

- Does this button look clickable?

- Should I scroll to find more options?

This reasoning produces natural variation in user paths.
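The observe, decide, act loop can be sketched as follows. Everything here is hypothetical: `SITE` is a toy page graph, and `choose_action` is a keyword-matching stand-in for the LLM call a real agent would make, so the example runs without a model. It still records a reasoning string at every step, mirroring the decision traces real agents produce:

```python
from dataclasses import dataclass
from typing import Optional

# Toy site model: page -> {clickable label -> destination page}. Hypothetical.
SITE = {
    "home": {"Shop shoes": "category", "About us": "about"},
    "category": {"Running shoe": "product", "Back": "home"},
    "product": {"Add to cart": "cart", "Back": "category"},
    "cart": {"Checkout": "checkout"},
    "about": {"Back": "home"},
    "checkout": {},
}


@dataclass
class Step:
    page: str
    action: str
    reasoning: str


def choose_action(goal: str, options: list[str]) -> tuple[Optional[str], str]:
    """Stand-in for the LLM call: pick the option sharing the most words with the goal."""
    goal_words = set(goal.lower().split())
    score, best = max(((len(goal_words & set(o.lower().split())), o) for o in options),
                      default=(0, None))
    if score == 0:
        return None, f"Nothing among {options} looks related to my goal, so I leave."
    return best, f"'{best}' shares {score} word(s) with my goal."


def run_agent(goal: str, start: str = "home", success: str = "checkout",
              max_steps: int = 10) -> tuple[bool, list[Step]]:
    """Observe the page, decide, act, and log reasoning until success, abandonment, or the step limit."""
    page, trace = start, []
    for _ in range(max_steps):
        if page == success:
            return True, trace
        action, reasoning = choose_action(goal, list(SITE[page]))
        if action is None:
            trace.append(Step(page, "abandon", reasoning))
            return False, trace
        trace.append(Step(page, action, reasoning))
        page = SITE[page][action]  # "click" the chosen element
    return page == success, trace


completed, trace = run_agent("add running shoes to cart and checkout")
for step in trace:
    print(f"{step.page:>9} -> {step.action:<12} | {step.reasoning}")
print("Task completed:", completed)
```

Because the stub is deterministic, every run takes the same path. In a real agent, the LLM samples its choices, which is where the path variation described above comes from.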

Data Collection

The system captures both quantitative metrics (task completion rates, time on task, number of actions) and qualitative data (the agent’s reasoning at each decision point).

Some platforms allow you to “interview” the agent after task completion to understand why it made specific choices.

| Component | Function | Output |
|---|---|---|
| Persona Generator | Creates user profiles from demographic specs | Thousands of unique synthetic identities |
| Browser Connector | Parses real web pages and executes actions | Actual interaction data from rendered sites |
| LLM Agent | Reasons through tasks and makes decisions | Behavioral paths and decision rationale |
| Data Collector | Captures metrics and reasoning traces | Quantitative and qualitative research data |
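Once sessions are recorded, turning them into the metrics above is simple aggregation. This sketch assumes a hypothetical `Session` record holding the completion flag, time on task, action count, and the agent's reasoning trace; the sample data is made up:

```python
from dataclasses import dataclass, field
from statistics import mean, median


@dataclass
class Session:
    persona_id: int
    completed: bool
    seconds: float  # time on task
    actions: int    # number of actions taken
    reasoning: list[str] = field(default_factory=list)


def summarize(sessions: list[Session]) -> dict:
    """Aggregate quantitative metrics plus the qualitative reason behind each failure."""
    done = [s for s in sessions if s.completed]
    return {
        "completion_rate": len(done) / len(sessions),
        "median_time_on_task_s": median(s.seconds for s in done) if done else None,
        "mean_actions": mean(s.actions for s in sessions),
        # Qualitative signal: the agent's last stated reason before failing.
        "failure_reasons": [s.reasoning[-1] for s in sessions if not s.completed and s.reasoning],
    }


sessions = [
    Session(1, True, 42.0, 6, ["Clicked 'Checkout' because it matched my goal."]),
    Session(2, True, 55.0, 8),
    Session(3, False, 90.0, 12, ["I could not find where to enter my card details."]),
    Session(4, True, 38.0, 5),
]
print(summarize(sessions))
```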

What Are the Benefits of Synthetic Testing?

- Speed and scale: Run thousands of simulated users in minutes rather than weeks. Traditional A/B testing requires sufficient traffic volume and time to reach statistical significance. Synthetic testing collapses this timeline dramatically.

- Cost efficiency: Eliminate participant recruitment costs, incentive payments, and scheduling complexity. A single synthetic testing session can match the volume of months of traditional usability studies.

- Early-stage feedback: Test prototypes and designs before committing development resources. Catch obviously broken interactions and accessibility issues during the design phase rather than after launch.

- Privacy-safe testing: Meta’s deployment specifically highlights privacy as a key benefit. Synthetic populations allow teams to test features without exposing real user data or behavior patterns. According to Meta’s ICSE 2024 research, its Test Universe platform provides privacy-safe manual testing used by over 21,000 engineers.

- Accessibility validation: Synthetic agents can be configured with specific accessibility constraints (screen reader use, low vision simulation, motor impairments) to audit compliance before real users encounter barriers.

- Regression testing: After code changes, synthetic testing quickly verifies that users can still complete basic workflows. These checks are largely deterministic, which plays to synthetic testing’s strengths.

- Iteration velocity: Test dozens of design variations in the time it takes to run a single traditional A/B test. Filter down to the most promising candidates before exposing real users to any of them.

Synthetic Testing Examples

Example 1: Ecommerce Checkout Flow Validation

An ecommerce team wants to test three checkout redesigns before committing to an A/B test.

They deploy 500 synthetic users per variation with personas matching their customer demographics.

The synthetic test reveals that one variation confuses users at the payment selection step, with 34% of synthetic users clicking the wrong element.

The team eliminates this variation before any real customer encounters the problem.

Example 2: Accessibility Compliance Audit

A financial services company needs to verify WCAG compliance across 200 pages.

They configure synthetic agents with screen reader personas and motor impairment constraints, then run automated audits across the entire site.

The system flags 47 pages with keyboard navigation issues and 12 with missing alt text, prioritized by traffic volume.
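Part of an audit like this does not need an agent at all. The sketch below, a hypothetical `audit` helper built on Python's `html.parser`, flags `<img>` tags with no `alt` attribute and sorts affected pages by traffic, as in the example. It treats `alt=""` as valid, since WCAG allows empty alt text for decorative images. Keyboard navigation issues need a live browser and are out of scope here; the page contents and view counts are invented:

```python
from html.parser import HTMLParser


class MissingAltParser(HTMLParser):
    """Count <img> tags that have no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = 0

    def handle_starttag(self, tag, attrs):
        # alt="" is allowed for decorative images, so only a missing attribute counts.
        if tag == "img" and "alt" not in dict(attrs):
            self.missing += 1


def audit(pages: dict[str, str], monthly_views: dict[str, int]) -> list[tuple[str, int, int]]:
    """Return (url, images missing alt, monthly views), highest-traffic pages first."""
    findings = []
    for url, html in pages.items():
        parser = MissingAltParser()
        parser.feed(html)
        parser.close()
        if parser.missing:
            findings.append((url, parser.missing, monthly_views.get(url, 0)))
    return sorted(findings, key=lambda f: f[2], reverse=True)


pages = {
    "/": '<img src="hero.jpg" alt="Couple reviewing their savings">',
    "/loans": '<img src="rates-chart.png"><img src="divider.svg" alt="">',
    "/faq": '<img src="step1.png"><img src="step2.png" />',
}
views = {"/": 50_000, "/loans": 12_000, "/faq": 3_000}
for url, missing, monthly in audit(pages, views):
    print(f"{url}: {missing} image(s) without alt text, {monthly:,} views/month")
```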

Example 3: Landing Page Rapid Iteration

A growth team wants to test 20 headline variations.

Traditional A/B testing would take months to test all variations sequentially.

Synthetic testing runs all 20 simultaneously, ranking them by simulated engagement metrics.

The team selects the top 3 for real A/B testing, reducing the testing timeline from months to days.
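The arithmetic behind “months” is the sample size a real A/B test needs. Here is a rough sketch using the standard normal-approximation formula for a two-sided, two-proportion test. The inputs are assumptions: 3% baseline conversion, a 10% relative lift worth detecting, α = 0.05, 80% power, and 20,000 visitors per day. All of these numbers are hypothetical, and the sketch ignores multiple-comparison corrections, which would raise the totals further:

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(p_control: float, relative_lift: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors per arm for a two-sided two-proportion z-test (normal approximation)."""
    p_variant = p_control * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p_variant - p_control) ** 2)


def days_needed(arms: int, n_per_arm: int, daily_visitors: int) -> int:
    """Days of traffic to fill every arm, assuming traffic is split evenly."""
    return ceil(arms * n_per_arm / daily_visitors)


DAILY_VISITORS = 20_000  # hypothetical
n = sample_size_per_arm(0.03, 0.10)
sequential_days = 20 * days_needed(2, n, DAILY_VISITORS)  # 20 tests, each control vs. one headline
finalist_days = days_needed(4, n, DAILY_VISITORS)         # one test: control vs. top 3
print(f"{n:,} visitors per arm; sequential: {sequential_days} days; finalists: {finalist_days} days")
```

Under these assumptions, each arm needs roughly 53,000 visitors. Twenty back-to-back tests therefore take about 120 days, while a single test of the top three against control takes about 11. Your own baseline, lift, and traffic will change the figures, but not the shape of the trade-off.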

Platforms and Tools for Synthetic Testing

- UXAgent: Open-source LLM agent framework for usability testing. Generates thousands of simulated users with customizable personas and provides qualitative interview capabilities. Developed by researchers and presented at CHI 2025.

- Synthetic Users: Commercial platform generating AI participants for user research. Focuses on making synthetic responses indistinguishable from human responses for surveys and interviews.

- Meta’s WW Simulation Platform: Enterprise-scale simulated population system deployed across Facebook, Instagram, and Messenger. Creates synthetic users with accumulated state information through realistic interactions.

- Uxia: AI-driven usability testing tool for rapid early-signal detection. Targets quick friction identification and regression issue detection.

- Loop11: Introduced AI browser agents for running usability tests. Combines traditional testing workflows with synthetic agent capabilities.

Trends in Synthetic Testing

The synthetic data market is experiencing explosive growth.

According to Mordor Intelligence, the market is expected to grow from $0.51 billion in 2025 to $2.67 billion by 2030 at a 39.40% CAGR.
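As a quick consistency check, the implied growth rate follows from the two endpoints over the five years from 2025 to 2030:

$$
\text{CAGR} = \left(\frac{2.67}{0.51}\right)^{1/5} - 1 \approx 5.235^{0.2} - 1 \approx 0.392
$$

That is about 39.2%, in line with the reported 39.40% once you allow for the endpoints being rounded to two decimals.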

Cloud deployment accounts for 67.50% of revenue, and automotive and transportation applications are forecast to grow at a 38.40% CAGR, driven by autonomous driving validation requirements.

Major acquisitions signal market maturation.

In March 2025, NVIDIA acquired Gretel for $320 million, integrating privacy-preserving generation into cloud AI services.

In January 2025, NVIDIA released the Cosmos World Foundation Models for generating photorealistic synthetic scenes, with Uber among the first users.

Regulatory frameworks are crystallizing around synthetic data.

The EU AI Act, for example, allows providers of high-risk AI systems to process special categories of personal data for bias detection only when synthetic or anonymised data cannot do the job. That pushes generation platforms from optional tool toward compliance necessity.

“Synthetic users should augment, not replace, real user input. The most effective teams use synthetic testing for rapid filtering and accessibility compliance, then validate directional findings with real users before major commitment.”

— 2025 IEEE Systematic Review of 52 peer-reviewed studies on synthetic users in human-centered design

The convergent conclusion from academic research: synthetic testing accelerates early iteration but raises persistent concerns about authenticity, variability, bias, and ethical validity.

The Critical Limitation: Optimizing for the Median

Here’s where we need to talk about what AI fundamentally cannot do.

The core issue with synthetic testing stems from what AI models actually are: probability engines trained on historical data.

When you deploy 100,000 synthetic users, you are sampling from a distribution centered on what has been common, documented, and repeated.
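Here is a toy illustration of why sampling from such a distribution thins out rare behavior. The behavior frequencies below are made up. Temperature scaling (dividing log-probabilities by a temperature T before renormalizing, a common LLM sampling setting) serves as one assumed example of a mechanism that sharpens a distribution toward its most common outcomes. It is not a model of any particular testing tool:

```python
# Made-up shares of how shoppers behave on a product page (illustration only).
OBSERVED = {
    "checks out normally": 0.70,
    "compares prices in another tab": 0.20,
    "abandons after a notification": 0.08,
    "buys pricier item on a whim": 0.02,
}


def apply_temperature(probs: dict[str, float], temperature: float) -> dict[str, float]:
    """Sharpen (T < 1) or flatten (T > 1) a distribution: p_i ** (1/T), renormalized.

    This is equivalent to a softmax over log-probabilities divided by T.
    """
    scaled = {k: p ** (1 / temperature) for k, p in probs.items()}
    total = sum(scaled.values())
    return {k: v / total for k, v in scaled.items()}


for t in (1.0, 0.7, 0.5):
    rare = apply_temperature(OBSERVED, t)["buys pricier item on a whim"]
    print(f"T={t}: about {round(rare * 100_000):,} of 100,000 agents buy on a whim")
```

With these numbers, the whim purchase drops from about 2,000 agents at T = 1 to well under 100 at T = 0.5, while the already dominant path grows. Even at T = 1, a model can only reproduce behaviors that are present in its data.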

Real humans are irrational.

They buy things they don’t need because a friend mentioned it.

They abandon carts because they got distracted by a notification.

They choose the more expensive option because the color felt “right.”

Real humans are emotional.

They make decisions based on how a brand makes them feel, not logical feature comparisons.

They respond to urgency, scarcity, and social proof in ways that vary wildly by mood and context.

They fall in love with products for reasons they cannot explain.

Real humans are distracted.

They have 47 browser tabs open.

They’re checking their phone while “shopping.”

They forget what they came to buy and leave with something else entirely.

AI agents, whose outputs gravitate toward the statistical center of internet behavior, cannot simulate any of this.

| What Synthetic Testing Measures | What It Cannot Measure |
|---|---|
| Compliance with documented UX patterns | Novel emotional responses to innovation |
| Adherence to accessibility standards | Irrational status-driven purchase decisions |
| Completion of familiar design tasks | Adoption barriers for genuinely new categories |
| Regression from working functionality | Context-dependent cognitive biases |
| Common usability friction points | Long-tail and edge-case behavior |

Academic research on LLM-generated personas consistently documents systematic biases.

A study of financial wellbeing personas found clear age bias and divergence from real survey data.

When researchers compared LLM personas with human responses in Bangladesh, humans outperformed LLMs across all measured dimensions, with particularly large gaps in empathy and credibility.

Most critically, different personas generated by LLMs fail to show meaningful behavioral variation.

While human respondents showed meaningful variation by gender, age, and individual differences, all LLM-generated personas produced essentially identical responses.

This is the central problem for CRO teams: synthetic users do not embody genuine diversity.

They embody the model’s statistical center.

And your highest-value customers are almost never at the statistical center.

How Wavesy Approaches Synthetic Testing

At Wavesy, we believe in a user-centric approach that puts human understanding at the center of optimization.

Synthetic testing is a powerful tool.

But it’s a tool, not a replacement for understanding real human behavior.

We treat synthetic testing as a first gate, not a final judgment.

Our workflow uses synthetic agents to eliminate obviously broken designs and audit accessibility compliance, then advances the most promising candidates to real user validation.
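A first gate like this can be expressed as a simple filter. The sketch assumes hypothetical per-variation synthetic metrics: completion rate, and the share of agents clicking a wrong element at a key step, like the 34% in the checkout example earlier. The thresholds are also hypothetical. Note that the function returns candidates for a real test, never a winner:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SyntheticResult:
    variation: str
    completion_rate: float  # share of synthetic sessions that finished the task
    misclick_rate: float    # share that clicked a wrong element at a key step


def first_gate(results: list[SyntheticResult], min_completion: float = 0.80,
               max_misclick: float = 0.15, keep: Optional[int] = None) -> list[SyntheticResult]:
    """Drop clearly broken variations. Survivors are candidates for a real A/B test, not winners."""
    survivors = [r for r in results
                 if r.completion_rate >= min_completion and r.misclick_rate <= max_misclick]
    survivors.sort(key=lambda r: r.completion_rate, reverse=True)
    return survivors if keep is None else survivors[:keep]


results = [
    SyntheticResult("A", 0.91, 0.05),
    SyntheticResult("B", 0.88, 0.34),  # confusing payment step: eliminated
    SyntheticResult("C", 0.62, 0.08),  # many agents never finish: eliminated
    SyntheticResult("D", 0.86, 0.07),
]
to_ab_test = [r.variation for r in first_gate(results)]
print(to_ab_test)  # ['A', 'D']
```

Sorting by synthetic completion rate only sets the order of review. Two variations that both pass this gate can still perform very differently with real customers.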

For a recent ecommerce client, we used synthetic testing to screen 12 checkout flow variations in a single day.

The synthetic results flagged three variations with significant usability friction.

We eliminated those variations before running an actual A/B test, saving weeks of real traffic that would have been wasted on clearly inferior designs.

Our accessibility audits deploy synthetic agents configured with screen reader personas across entire sites, generating prioritized remediation lists that development teams can action immediately.

This catches 80% of compliance issues before any real user with a disability encounters them.

But we never declare winners based on synthetic results alone.

The final two checkout variations both tested well synthetically.

But the real A/B test revealed a 12% conversion difference between them.

Synthetic testing couldn’t predict which variation would trigger stronger purchase intent in actual customers.

Because purchase intent is emotional.

And emotional responses require real humans.

FAQ

Can synthetic testing replace A/B testing?

No.

Synthetic testing excels at filtering obviously broken designs and auditing compliance.

But it cannot predict genuine market adoption, emotional responses, or irrational decision-making.

Use synthetic testing to narrow candidates, then validate winners with real users.

How accurate are synthetic user personas?

Research shows LLM-generated personas exhibit systematic biases (positivity bias, age bias) and fail to show meaningful behavioral variation.

Synthetic personas approximate average documented behavior, not the full distribution of real user responses.

Your outlier customers (often your most valuable) are poorly represented.

What types of tests work best with synthetic users?

Functional regression testing (can users still complete workflows?), accessibility compliance (do screen readers work?), and filtering obviously broken designs (is this layout confusing?).

These questions have relatively deterministic answers, which synthetic testing can check reliably.

How much does synthetic testing cost compared to traditional testing?

Synthetic testing eliminates participant recruitment costs and dramatically shortens timelines.

A synthetic session testing 1,000 users might cost a few hundred dollars and complete in minutes.

Equivalent traditional research could cost $50,000+ and take months.

Should I worry about synthetic testing biases?

Yes.

Document the biases present in your synthetic populations and explicitly compare them against real user data in your domain.

If synthetic results diverge significantly from real user behavior patterns, increase skepticism about synthetic findings.
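One way to make that comparison concrete, sketched here with invented numbers, takes two steps. First, measure the total variation distance between where real and synthetic sessions end. Second, compare how much conversion varies across persona segments in each population. The helper names and the 0.2 divergence threshold are hypothetical choices, not an established standard:

```python
def normalize(counts: dict[str, int]) -> dict[str, float]:
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()}


def total_variation(a: dict[str, int], b: dict[str, int]) -> float:
    """Total variation distance: 0 means identical distributions, 1 means no overlap."""
    p, q = normalize(a), normalize(b)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))


def segment_spread(rates: dict[str, float]) -> float:
    """Gap between the best- and worst-converting persona segments."""
    return max(rates.values()) - min(rates.values())


# Invented data: the step at which sessions ended, and conversion by age segment.
real_exits = {"product": 400, "cart": 250, "shipping": 150, "payment": 100, "confirmation": 100}
synthetic_exits = {"product": 150, "cart": 100, "shipping": 100, "payment": 50, "confirmation": 600}
real_by_age = {"18-24": 0.07, "35-44": 0.10, "55+": 0.13}
synthetic_by_age = {"18-24": 0.60, "35-44": 0.61, "55+": 0.60}

DIVERGENCE_THRESHOLD = 0.2  # hypothetical; calibrate on your own past comparisons

tvd = total_variation(real_exits, synthetic_exits)
if tvd > DIVERGENCE_THRESHOLD:
    print(f"Exit distributions diverge (TVD={tvd:.2f}); treat synthetic findings skeptically.")
print(f"Segment spread: real {segment_spread(real_by_age):.2f}, "
      f"synthetic {segment_spread(synthetic_by_age):.2f}")
```

In this made-up data, synthetic users reach confirmation far more often than real ones and barely differ by age, which mirrors the positivity bias and missing behavioral variation described earlier.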

How do I get started with synthetic testing?

Begin with bounded applications: accessibility audits, regression testing, or filtering multiple design variations.

Avoid using synthetic testing for strategic decisions about novel features or market validation until you’ve validated synthetic results against real user behavior in your specific context.